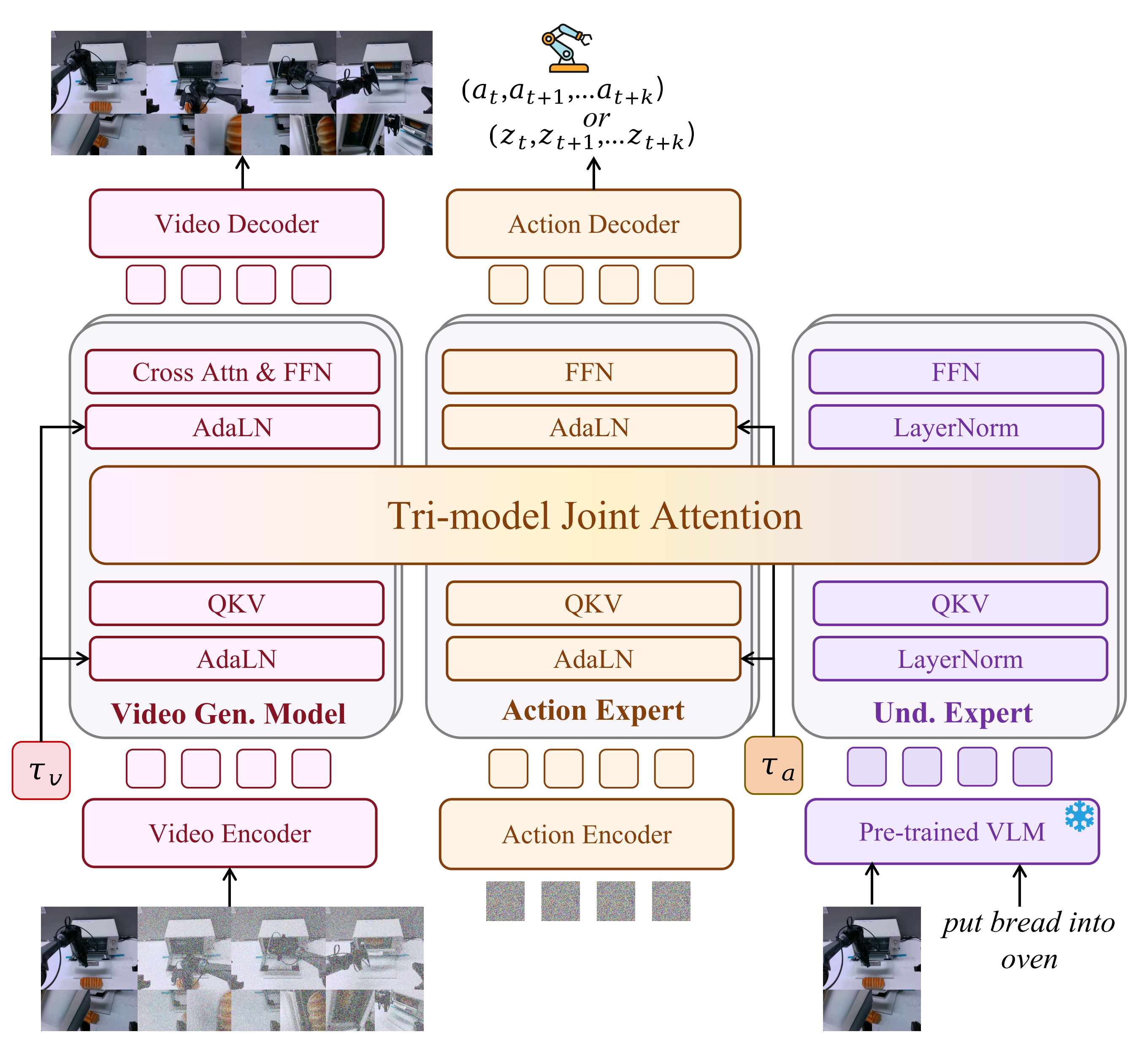

Motus is a unified latent action world model that leverages existing pretrained models and rich, shareable motion information. It introduces a Mixture-of-Transformers (MoT) architecture that integrates three experts (understanding, action, and video generation) and adopts a UniDiffuser-style scheduler to switch flexibly between modeling modes: World Models, Vision-Language-Action Models, Inverse Dynamics Models, Video Generation Models, and Video-Action Joint Prediction Models. Motus further uses optical flow to learn latent actions and adopts a three-stage training pipeline and a six-level data pyramid, thereby extracting pixel-level "delta actions" and enabling large-scale action pretraining.

Motus: A Unified Latent Action World Model

*Joint first authors †Joint project lead

Framework Overview

Motus Architecture

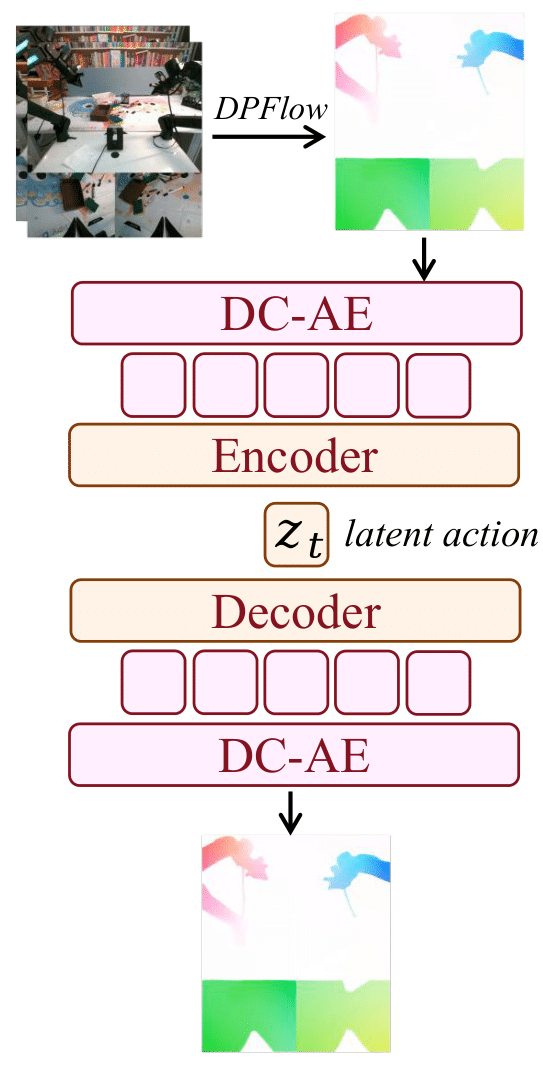
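One way to picture the UniDiffuser-style mode switching is as a choice of per-modality diffusion timesteps. In UniDiffuser, a modality held at timestep 0 acts as a clean condition, a modality held at the maximum timestep is pure noise and is effectively marginalized out, and a modality at the running timestep is being denoised. The sketch below applies that idea to a video stream and an action stream. The mode names, the `MODES` table, and the mapping of each Motus mode to roles are illustrative assumptions, not the project's actual code.

```python
# Illustrative sketch only: role assignments per mode are assumptions
# based on the UniDiffuser timestep convention, not Motus source code.

# (video role, action role) for each modeling mode.
MODES = {
    "world_model": ("denoise", "condition"),        # predict video given actions
    "inverse_dynamics": ("condition", "denoise"),   # predict actions given video
    "vla": ("marginalize", "denoise"),              # predict actions, ignore future video
    "video_generation": ("denoise", "marginalize"), # predict video, ignore actions
    "joint_prediction": ("denoise", "denoise"),     # predict video and actions together
}


def role_timestep(role: str, t: float, t_max: float) -> float:
    """Map a modality's role to the timestep fed to the shared model."""
    if role == "denoise":
        return t        # follows the running diffusion timestep
    if role == "condition":
        return 0.0      # clean input, used as conditioning
    if role == "marginalize":
        return t_max    # pure noise, carries no information
    raise ValueError(f"unknown role: {role}")


def mode_timesteps(mode: str, t: float, t_max: float = 1.0) -> tuple:
    """Return (video_timestep, action_timestep) for the given mode."""
    video_role, action_role = MODES[mode]
    return (role_timestep(video_role, t, t_max),
            role_timestep(action_role, t, t_max))


if __name__ == "__main__":
    for mode in MODES:
        print(mode, mode_timesteps(mode, t=0.3))
```

Under this convention a single network covers all five modes, because switching modes only changes the timestep pair passed alongside the inputs.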

Latent Actions

To leverage large-scale heterogeneous data, we introduce latent actions that encode motion directly from optical flow. DPFlow computes pixel-level displacements between frames, which are then compressed via a deep convolutional variational autoencoder (DC-AE) and a lightweight encoder, enabling the model to learn cross-embodiment motion priors from diverse video sources.
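The data flow described above (dense flow field, then spatial compression, then a small encoder) can be sketched with toy stand-ins. In this minimal example a synthetic uniform flow field replaces DPFlow output, average pooling replaces the DC-AE, and a fixed random linear projection replaces the lightweight encoder. All function names and sizes are hypothetical and chosen only to show how the shapes change.

```python
import random


def synthetic_flow(height, width, dx, dy):
    """Toy flow field: every pixel moves by (dx, dy). Stand-in for DPFlow output."""
    return [[(dx, dy) for _ in range(width)] for _ in range(height)]


def pool_flow(flow, patch):
    """Average-pool the flow over non-overlapping patch x patch blocks.

    Stand-in for the learned compression stage (the DC-AE in the text).
    """
    height, width = len(flow), len(flow[0])
    pooled = []
    for top in range(0, height - patch + 1, patch):
        row = []
        for left in range(0, width - patch + 1, patch):
            block = [flow[y][x]
                     for y in range(top, top + patch)
                     for x in range(left, left + patch)]
            n = len(block)
            row.append((sum(v[0] for v in block) / n,
                        sum(v[1] for v in block) / n))
        pooled.append(row)
    return pooled


def make_weights(latent_dim, feature_dim, seed=0):
    """Fixed random projection matrix. Stand-in for the lightweight encoder."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(feature_dim)]
            for _ in range(latent_dim)]


def encode_latent(pooled, weights):
    """Flatten the pooled flow and project it to a latent action vector."""
    features = [c for row in pooled for vec in row for c in vec]
    return [sum(w * f for w, f in zip(w_row, features)) for w_row in weights]


flow = synthetic_flow(8, 8, dx=1.0, dy=-0.5)   # 8 x 8 x 2 flow field
pooled = pool_flow(flow, patch=4)              # 2 x 2 x 2 after pooling
weights = make_weights(latent_dim=4, feature_dim=2 * 2 * 2)
latent = encode_latent(pooled, weights)        # 4-dimensional latent action
```

Because the latent is computed from pixel motion alone, the same pipeline applies to any video source, whether or not robot action labels are available.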

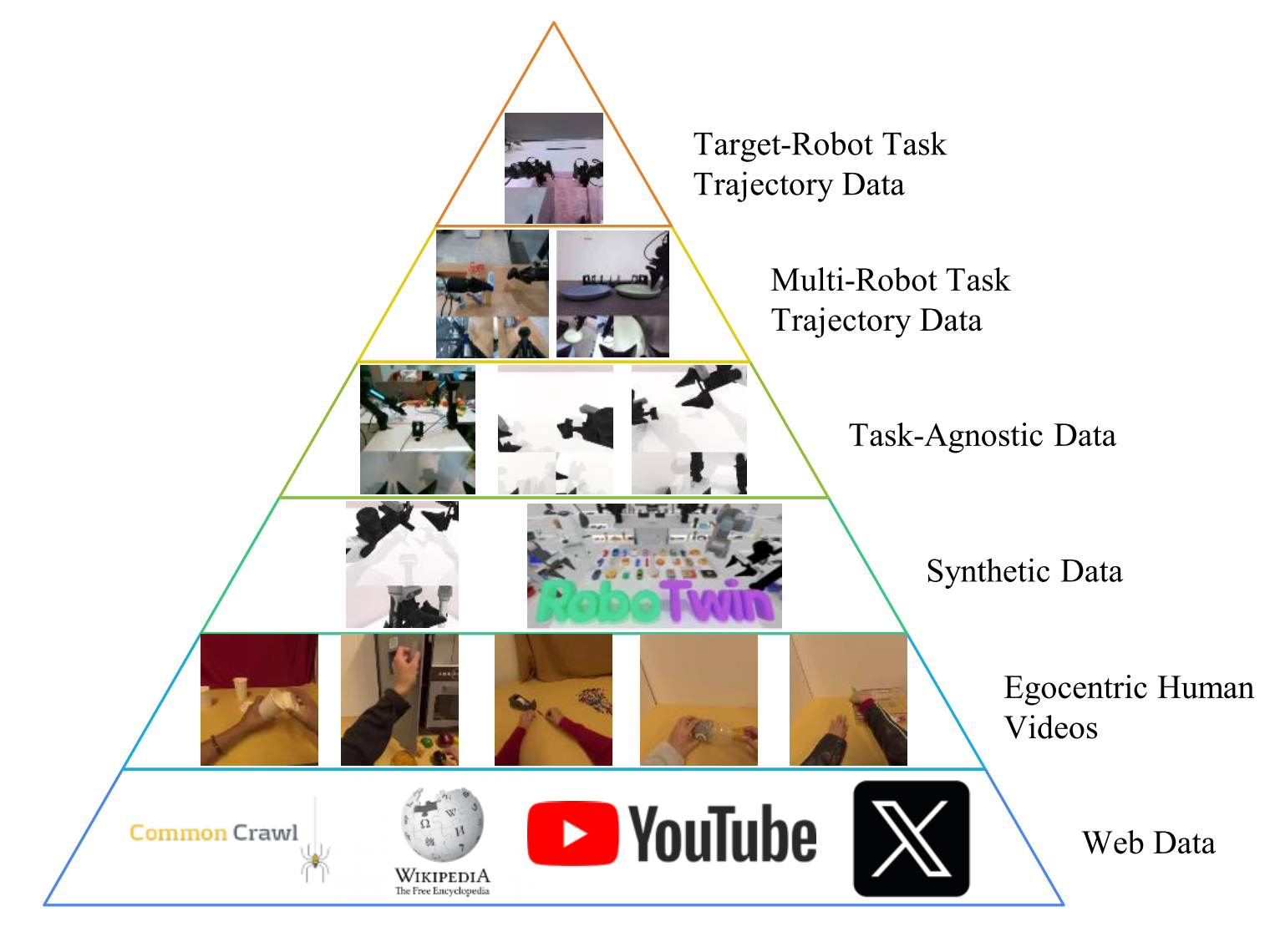

Data Pyramid

Three-Stage Training Recipe

Stage 1 (VGM Training)

Trains the VGM with embodied data.

Stage 2 (Motus Pretraining)

Pretrains the Motus model with latent actions.

Stage 3 (Motus SFT)

Fine-tunes the Motus model on target-robot trajectories.

| Stage | Data | Training |
|---|---|---|
| Pretrained Foundation Models | Level 1: Web Data | VGM and VLM |
| Stage 1 (VGM Training) | Level 2: Egocentric Human Videos; Level 3: Synthetic Data; Level 5: Multi-Robot Task Trajectory | Only VGM |
| Stage 2 (Motus Pretraining) | Level 2: Egocentric Human Videos; Level 3: Synthetic Data; Level 4: Task-agnostic Data; Level 5: Multi-Robot Task Trajectory | Motus (all 3 experts, with latent actions) |
| Stage 3 (Motus SFT) | Level 6: Target-Robot Task Trajectory | Motus (all 3 experts, with actions) |
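
The stage-to-data mapping in the table can also be written down as a small data structure, which makes it easy to check which pyramid levels feed which stage. This is a sketch for illustration; the `Stage` class, the `RECIPE` tuple, and the helper names are hypothetical, while the stage names, data levels, and trained components are copied from the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    data_levels: tuple   # data pyramid levels used in this stage
    trained: str         # which components are trained


# Level names as listed in the table.
DATA_LEVELS = {
    1: "Web Data",
    2: "Egocentric Human Videos",
    3: "Synthetic Data",
    4: "Task-agnostic Data",
    5: "Multi-Robot Task Trajectory",
    6: "Target-Robot Task Trajectory",
}

RECIPE = (
    Stage("Pretrained Foundation Models", (1,), "VGM and VLM"),
    Stage("Stage 1 (VGM Training)", (2, 3, 5), "Only VGM"),
    Stage("Stage 2 (Motus Pretraining)", (2, 3, 4, 5),
          "Motus (all 3 experts, with latent actions)"),
    Stage("Stage 3 (Motus SFT)", (6,),
          "Motus (all 3 experts, with actions)"),
)


def stages_using(level: int) -> list:
    """Names of all stages that train on the given pyramid level."""
    return [stage.name for stage in RECIPE if level in stage.data_levels]


def data_for(stage_name: str) -> list:
    """Human-readable data sources for a stage, looked up by exact name."""
    for stage in RECIPE:
        if stage.name == stage_name:
            return [DATA_LEVELS[level] for level in stage.data_levels]
    raise KeyError(stage_name)


if __name__ == "__main__":
    for level, label in DATA_LEVELS.items():
        print(f"Level {level} ({label}): {stages_using(level)}")
```

Written this way, it is visible that Level 4 (task-agnostic data) enters only in Stage 2, the stage that trains with latent actions, and that target-robot trajectories are reserved for the final fine-tuning stage.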